Training Tesseract OCR on Kurdish Historical Documents

Table of Links

Abstract and 1. Introduction

1.1 Printing Press in Iraq and Iraqi Kurdistan

1.2 Challenges in Historical Documents

1.3 Kurdish Language

-

Related work and 2.1 Arabic/Persian

2.2 Chinese/Japanese and 2.3 Coptic

2.4 Greek

2.5 Latin

2.6 Tamizhi

-

Method and 3.1 Data Collection

3.2 Data Preparation and 3.3 Preprocessing

3.4 Environment Setup, 3.5 Dataset Preparation, and 3.6 Evaluation

-

Experiments, Results, and Discussion and 4.1 Processed Data

4.2 Dataset and 4.3 Experiments

4.4 Results and Evaluation

4.5 Discussion

-

Conclusion

5.1 Challenges and Limitations

Online Resources, Acknowledgments, and References

4 Experiments, Results, and Discussion

Initially, we collected some historical publications from the Zaytoon Public Library in Erbil. However, because the documents were fragile, digitizing them was difficult. We then found, online, the Zheen Center for Documentation and Research in Sulaymaniyah (https://zheen.org), a facility that specializes in scanning and archiving historical documents using equipment designed for that purpose. After we visited the center and explained our project, they agreed to provide us with digital copies of the earliest Kurdish publications in their collection.

4.1 Processed Data

To handle image processing, we used a freely available batch-processing tool. With this tool, we loaded the images, de-skewed them to correct any rotation, cropped them automatically, converted them to binary (black-and-white) format, and saved the results in a destination directory.
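The binarization step can be illustrated with a minimal sketch. The paper does not say which thresholding method the batch tool applies, so the use of Otsu's method here is an assumption, and the function names `otsu_threshold` and `binarize` are hypothetical. The image is represented as a plain list of rows of 8-bit grayscale values to keep the example self-contained.

```python
def otsu_threshold(pixels):
    """Return Otsu's threshold (0-255) for an iterable of 8-bit grayscale values."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels with value <= t
    sum0 = 0    # sum of pixel values <= t
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        # Between-class variance (up to a constant factor).
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def binarize(image):
    """Map a grayscale image (list of rows) to 0/255 using Otsu's threshold."""
    t = otsu_threshold(p for row in image for p in row)
    return [[255 if p > t else 0 for p in row] for row in image]


# Tiny illustrative "image": dark ink values and bright paper values.
page = [[10, 12, 200],
        [11, 210, 205]]
print(binarize(page))
```

In practice a dedicated imaging tool or library would handle de-skewing and cropping as well; this sketch covers only the thresholding idea.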

4.2 Dataset

After receiving the historical documents from the Zheen Center for Documentation and Research in digital format, we split the pages into single-line images, each paired with its transcription. We used an image processing application to crop the lines and saved them in TIFF format.

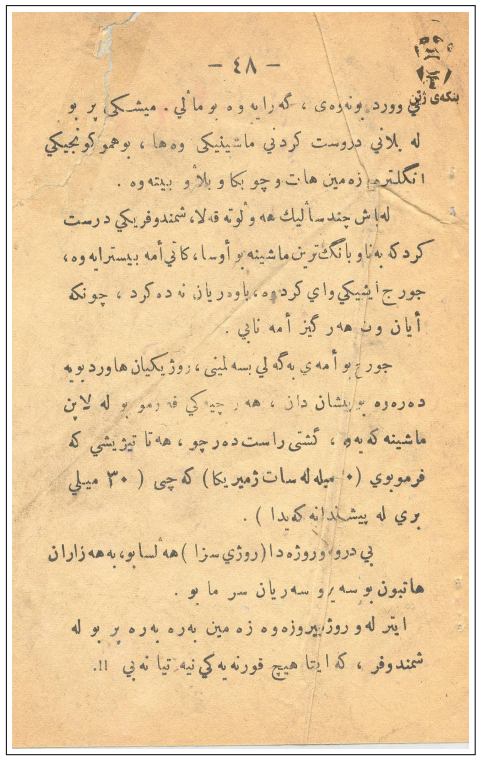

After converting the pages into line images (see Figure 16), we created a transcription file for each line image by manually typing its text in a text editor.

We gave each transcription file the same name as its line image, with the (.gt.txt) suffix (see Figure 17).

This produced the dataset for training Tesseract, 1233 files in total: half are line images and the other half are the corresponding transcription files (see Table 1).
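Because every line image needs a transcription with a matching name, it can help to check the pairing before training. The following is a minimal sketch, not the authors' tooling: the function name `check_ground_truth` and the `.tif` extension are assumptions (the paper only states that the images were saved in TIFF format).

```python
from pathlib import Path

GT_SUFFIX = ".gt.txt"


def check_ground_truth(directory, image_suffix=".tif"):
    """Return (paired, images_without_text, texts_without_image) as sorted base names."""
    names = [p.name for p in Path(directory).iterdir() if p.is_file()]
    # Strip the suffix to get the shared base name, e.g. "line_001".
    images = {n[: -len(image_suffix)] for n in names if n.endswith(image_suffix)}
    texts = {n[: -len(GT_SUFFIX)] for n in names if n.endswith(GT_SUFFIX)}
    return sorted(images & texts), sorted(images - texts), sorted(texts - images)
```

A call such as `check_ground_truth("data/ground-truth")` (a hypothetical path) would return three lists: complete pairs, images lacking a transcription, and transcriptions lacking an image. The last two should be empty for a consistent dataset.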

4.3 Experiments

In this section, we provide details of the steps taken to prepare our environment, the training process of the model, and other relevant aspects.

4.3.1 Environment Setup

For the training environment, we used Ubuntu 22.04.2 LTS (Jammy Jellyfish). We cloned the tesstrain repository from https://github.com/tesseract-ocr/tesstrain and trained the model using our prepared dataset.
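As a rough illustration of how a tesstrain run is typically launched, the sketch below assembles the `make training` command from the tesstrain Makefile. The helper `tesstrain_command`, the model name `ckb_hist`, the start model `ara`, and the iteration count are all hypothetical; the paper does not report the values the authors used at this point.

```python
def tesstrain_command(model_name, start_model=None, max_iterations=None):
    """Build the argument list for a tesstrain `make training` invocation.

    tesstrain looks for line images and .gt.txt files in
    data/<model_name>-ground-truth by default.
    """
    cmd = ["make", "training", f"MODEL_NAME={model_name}"]
    if start_model:
        # Fine-tune from an existing traineddata model instead of from scratch.
        cmd.append(f"START_MODEL={start_model}")
    if max_iterations is not None:
        cmd.append(f"MAX_ITERATIONS={max_iterations}")
    return cmd


# Hypothetical values, for illustration only.
print(" ".join(tesstrain_command("ckb_hist", start_model="ara", max_iterations=10000)))
```

The resulting list could be passed to `subprocess.run(..., cwd=<path to the cloned tesstrain directory>)` once Tesseract and its training tools are installed.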


:::info Authors:

(1) Blnd Yaseen, University of Kurdistan Hewlêr, Kurdistan Region - Iraq (blnd.yaseen@ukh.edu.krd);

(2) Hossein Hassani, University of Kurdistan Hewlêr, Kurdistan Region - Iraq (hosseinh@ukh.edu.krd).

:::

:::info This paper is available on arXiv under the Attribution-NonCommercial-NoDerivs 4.0 International license.

:::
