Backups, Not Burnout: What We Shipped in Postgresus 2.0 (and What We Dropped)

It's been 6 months since the first release of Postgresus. During this time, the project received 247 commits, new features, as well as ~2.8k stars on GitHub and ~42k downloads from Docker Hub. The community has also grown: there are now 13 contributors and 90 people in the Telegram group.

In this article I'll cover:

- what changed in the project over six months;

- what new features appeared;

- what plans are next.


What is Postgresus?

For those hearing about the project for the first time: Postgresus is an open source PostgreSQL backup tool with a UI. It runs scheduled backups of multiple databases, saves them locally or to external storage, and notifies you when backups complete or fail.

The project deploys with a single command in Docker. It can be installed via shell script, Docker command, docker-compose.yml and now via Helm for Kubernetes. More about installation methods.

Besides the main feature "make backups", the project can:

- Store backups locally, in S3, Cloudflare R2, Google Drive, Azure Blob Storage and NAS. More details here.

- Send status notifications to Slack, Discord, Telegram, MS Teams, via email and to a configurable webhook. More details here.

- Separate databases by workspaces, grant access to other users and save audit logs. More details here.

- Encrypt backups and credentials. More details here.

- Work with both self-hosted and cloud-managed databases.

Website - https://postgresus.com/

GitHub - https://github.com/RostislavDugin/postgresus (would appreciate a star ⭐️)

The project interface looks like this:

Overview video: https://www.youtube.com/watch?v=1qsAnijJfJE

For those who want to participate in development, there's a separate page in the documentation. If someone wants to help the project but doesn't want to code — just tell your colleagues and friends about the project! This is also a big help and contribution to the project.

I know how to develop, but promoting even an open source project is quite difficult. The project gains recognition thanks to those who make video reviews and talk about it on social media. Thank you!

New features in version 2.0

A lot has changed over these six months. The project has been improved in four directions:

- expanded basic functionality;

- improved UX;

- added new capabilities for teams (DBAs, DevOps, teams, enterprises);

- improved protection and encryption to meet enterprise requirements.

Let's go through each one.

1) Database health check

Besides backups, Postgresus now monitors database health: it shows uptime and alerts you if a database was unavailable.

The health check can be configured or disabled. If the database becomes unavailable, the system sends a notification about it.
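To make the idea concrete, here is a minimal sketch of how uptime tracking with notifications on state changes could work. This is an illustration only, not Postgresus's actual code: the `HealthTracker` class, its fields and the message texts are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HealthTracker:
    """Tracks availability checks for one database (hypothetical structure)."""

    total_checks: int = 0
    failed_checks: int = 0
    is_available: bool = True

    def record(self, available: bool) -> Optional[str]:
        """Record one check; return a message only when the state changes."""
        self.total_checks += 1
        if not available:
            self.failed_checks += 1
        changed = available != self.is_available
        self.is_available = available
        if not changed:
            return None  # no repeated alerts while the state stays the same
        return "database is back online" if available else "database is unavailable"

    @property
    def uptime_percent(self) -> float:
        """Share of successful checks, as a percentage."""
        if self.total_checks == 0:
            return 100.0
        ok = self.total_checks - self.failed_checks
        return 100.0 * ok / self.total_checks


# Five checks: two successes, two failures, one recovery.
tracker = HealthTracker()
events = [tracker.record(ok) for ok in (True, True, False, False, True)]
```

Alerting only on transitions is one possible design choice: it avoids sending a new message on every failed check during a long outage.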

2) New sources for storing backups and sending notifications

Initially Postgresus could only store backups locally and in S3. Google Drive, Cloudflare R2, Azure Blob Storage and NAS have since been added. Plans include adding FTP and possibly SFTP and NFS.

For notifications, the project initially had Telegram and email (SMTP). Now Slack, Discord, MS Teams and webhooks are also supported. Webhooks can also be flexibly configured to connect to different platforms:

3) Workspace and user management

Previously the system had only one user (administrator), and databases were global for the entire system. Now Postgresus supports creating workspaces to separate databases and adding users to those workspaces, with two roles:

- view only (can view backup history, download backup files);

- edit (can add/delete/modify databases in addition to reading).

Consequently, you can now separate databases:

- client X databases;

- startup Y databases;

- team Z databases.

DBA teams and large outsourcing companies started using Postgresus, so this became an important feature. It makes the project useful not only to individual developers, DevOps or DBAs, but to entire teams and enterprises.
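The role split described above can be sketched as a small permission table. This is a hypothetical model for illustration, not the project's real data structures: the action names, the `Role` enum and `is_allowed` are all assumptions.

```python
from enum import Enum
from typing import Dict


class Role(Enum):
    VIEWER = "view only"
    EDITOR = "edit"


# Actions taken from the role descriptions; the identifiers are hypothetical.
READ_ACTIONS = {"view_backup_history", "download_backup"}
WRITE_ACTIONS = {"add_database", "delete_database", "modify_database"}

PERMISSIONS = {
    Role.VIEWER: READ_ACTIONS,
    Role.EDITOR: READ_ACTIONS | WRITE_ACTIONS,  # edit includes everything a viewer can do
}


def is_allowed(memberships: Dict[str, Role], workspace: str, action: str) -> bool:
    """A user may act only inside workspaces they are a member of."""
    role = memberships.get(workspace)
    return role is not None and action in PERMISSIONS[role]


# One user: viewer in the "client X" workspace, editor in "team Z".
memberships = {"client X": Role.VIEWER, "team Z": Role.EDITOR}
```

With this model, `is_allowed(memberships, "client X", "delete_database")` is false, while the same action in "team Z" is allowed, and any action in a workspace the user does not belong to is rejected.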

Audit logs were also added:

If someone decided to delete all databases or for some reason downloaded all backups of all databases — this will be visible 🙃

4) Encryption and protection

In the first version, honestly, I didn't have time for security. I stored all backup files locally, no one had access to them, and my projects weren't exactly top secret.

But when Postgresus went open source, I quickly learned that teams often save backups to shared S3 buckets and don't want others reading them. Database passwords shouldn't be stored in plain text in Postgresus's own DB either, since many people have access to its servers. ~~And there's some distrust of each other.~~ Simply not exposing secrets via API is no longer enough.

So, encryption and security of the entire project became the main priority for Postgresus. Protection now works at three levels, and there's a dedicated documentation page for this.

1) Encryption of all passwords and secrets

All database passwords, Slack tokens and S3 credentials are stored encrypted in Postgresus's database. They're decrypted only when needed. The secret key is stored in a separate file from the DB, so even if someone hacked Postgresus's DB (which isn't exposed externally anyway) — they still couldn't read anything. Encryption uses AES-256-GCM.

2) Encryption of backup files

Backup files are now also encrypted (optionally, but encryption is enabled by default). If you lost a file or saved it in public S3 — it's not so scary anymore.

Encryption uses both salt and a unique initialization vector. This prevents attackers from finding patterns to guess the encryption key, even if they steal all your backup files.

Encryption is done in streaming mode and also uses AES-256-GCM.
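To show why a per-file salt and a unique IV matter, here is a minimal sketch that derives a fresh 256-bit key for every backup from one master secret. Everything here is an assumption made for illustration: the use of PBKDF2, the salt size, the iteration count and the function names are not taken from Postgresus. The AES-256-GCM step itself is left out because Python's standard library has no AES implementation.

```python
import hashlib
import os

KEY_LEN = 32          # 256-bit key, as AES-256 requires
SALT_LEN = 16         # assumed salt size
NONCE_LEN = 12        # the IV (nonce) size commonly used with GCM
ITERATIONS = 100_000  # assumed PBKDF2 work factor


def derive_key(master_secret: bytes, salt: bytes) -> bytes:
    """Derive a per-file key from the master secret and the file's salt."""
    return hashlib.pbkdf2_hmac("sha256", master_secret, salt, ITERATIONS, dklen=KEY_LEN)


def new_backup_key(master_secret: bytes):
    """Return (key, salt, nonce) for one new backup file.

    The salt and nonce are not secret and would be stored next to the
    ciphertext; only the master secret has to stay private.
    """
    salt = os.urandom(SALT_LEN)
    nonce = os.urandom(NONCE_LEN)
    return derive_key(master_secret, salt), salt, nonce


# Two backups made with the same master secret get different keys and nonces.
secret = b"example master secret"
key_a, salt_a, nonce_a = new_backup_key(secret)
key_b, salt_b, nonce_b = new_backup_key(secret)
restored_key_a = derive_key(secret, salt_a)  # what a restore would recompute
```

Because each file has its own salt and nonce, identical data backed up twice produces unrelated ciphertexts, while a restore can still rebuild the key from the stored salt.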

3) Using a read-only user for backups

Despite the first two points, there's no 100% protection method. There's still a tiny chance that:

- your server will be completely hacked, they'll get the secret key and legitimate IP address to access the database;

- a hacker will somehow decrypt passwords in Postgresus's internal DB and find out your database password;

- or, worse, it won't be a hacker but someone from inside;

- and they'll corrupt your database, having previously deleted backups.

So Postgresus should help users minimize damage. The probability of such a hack may be near zero, but that's cold comfort if you're the one it happens to.

Now when you add a database user with write permissions to Postgresus, the system offers to automatically create a read only user and run backups through it. People are much more likely to create a read-only role when it takes one click instead of manually setting it up in the database.

Here's how Postgresus offers it:

And it offers very persistently:

With this approach, even if your Postgresus server gets hacked, everything gets decrypted and attackers gain access to your DB — they at least won't be able to corrupt the database. Knowing that not everything is lost is a pretty good consolation.
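For reference, here is a sketch of the kind of SQL that sets up a read-only role by hand. These are standard PostgreSQL statements, but the exact statements Postgresus runs may differ; the helper name, role name and schema are assumptions made for illustration.

```python
from typing import List


def read_only_role_sql(role: str, password: str, database: str,
                       schema: str = "public") -> List[str]:
    """Build statements that create a role able to read but not modify data."""

    def ident(name: str) -> str:
        # Quote an identifier, doubling any embedded double quotes.
        return '"' + name.replace('"', '""') + '"'

    def literal(value: str) -> str:
        # Quote a string literal, doubling any embedded single quotes.
        return "'" + value.replace("'", "''") + "'"

    r, d, s = ident(role), ident(database), ident(schema)
    return [
        f"CREATE ROLE {r} LOGIN PASSWORD {literal(password)}",
        f"GRANT CONNECT ON DATABASE {d} TO {r}",
        f"GRANT USAGE ON SCHEMA {s} TO {r}",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {s} TO {r}",
        # Dumping sequence values also requires read access to sequences.
        f"GRANT SELECT ON ALL SEQUENCES IN SCHEMA {s} TO {r}",
        # Applies to tables created later by the role that runs this statement.
        f"ALTER DEFAULT PRIVILEGES IN SCHEMA {s} GRANT SELECT ON TABLES TO {r}",
    ]


# Hypothetical role and database names.
statements = read_only_role_sql("backup_ro", "pa'ss", "shop")
```

No statement grants INSERT, UPDATE or DELETE, so a leaked password for this role exposes data for reading but does not allow changing it.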

5) Optimal compression by default

The first version of Postgresus offered all compression algorithms that PostgreSQL supports: gzip, lz4 and zstd, with compression levels from 1 to 9. Honestly, I didn't really understand why anyone would need all these options. I just picked gzip with level 5 as what seemed like a reasonable middle ground.

But once the project went open source, I had to actually research this. So I ran 21 backups in all possible combinations and found the best trade-off between speed and size.

So now the default for all backups is zstd with compression level 5 if the PostgreSQL version is 16 or higher. For lower versions it's still gzip (by the way, thanks again to contributors for PG 12 support). Here's zstd 5 compared to gzip 5 (it's at the bottom):

On average, backups are compressed ~8 times relative to the actual database size.
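The version-based default can be expressed as a tiny helper that picks a `pg_dump` compression flag. This is a sketch under assumptions: the function name is hypothetical, and using gzip level 5 for the fallback is a guess based on the earlier default, since the fallback level isn't stated above.

```python
def default_compress_flag(pg_major: int) -> str:
    """Pick a pg_dump --compress value for a given PostgreSQL major version."""
    if pg_major >= 16:
        # pg_dump 16+ accepts a "method:level" form.
        return "--compress=zstd:5"
    # On older versions a plain integer is a gzip level (assumed level 5).
    return "--compress=5"


flags = {version: default_compress_flag(version) for version in (12, 15, 16, 17)}
```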

6) Kubernetes Helm support

This one is quick — we added native k8s support with Helm installation. Teams running k8s in the cloud can now deploy Postgresus more easily. Three contributors helped with this feature.

7) Dark theme

I'm not really a fan of dark themes. But there were many requests, so I added one (~~thanks Claude for the help and designer's eye~~). Surprisingly, it turned out better than the light theme and became the default. I even redesigned the website from light to dark — it looked that good.

Before:

After:

8) Dropped incremental backups and PITR (Point-in-Time Recovery)

First, some context:

- Logical backup: PostgreSQL itself exports its data (to a file or group of files).

- Physical backup: we connect to PostgreSQL's disk and make a copy of its files.

- Incremental backup with PITR support: a physical backup plus the change log (WAL), copied from disk or via the replication protocol.

I believed Postgresus should eventually support incremental backups. After all, that's what serious tools do! Even ChatGPT says serious tools can recover with precision down to the second and transaction.

So I started working on it. But then reality hit:

- It's very hard to make it convenient in terms of UX and DevEx, because you need to either physically connect to the disk with the DB or set up replication.

For recovery, there's no way to avoid connecting to the disk with the database. To recover from an incremental backup, the backup tool simply restores the pgdata folder (more precisely, part of it).

If the database is really big (for example, several TB or more), fine-tuning in configs is needed. Here a UI is more of a hindrance than a help.

- Most clouds (AWS, Google, Selectel) won't work with external incremental backups if they require disk access. In theory, with some workarounds, they will work via replication. But recovering from such a backup into a managed cloud database still won't work.

- All clouds provide incremental backups out of the box. In general, this is one of the reasons why they're paid. And even they usually don't allow recovery to a specific second or transaction. And if you're not in the cloud, it's even less likely that your project is critical enough to require such precision.

Therefore, if Postgresus is making a backup of a managed DB — it's enough to do it roughly once a week. Just in case of unforeseen emergency or if the cloud doesn't allow storing backups long enough. Otherwise, rely on the cloud's own incremental backups.

- For most projects, incremental backups aren't really that necessary. For small databases, an hourly backup is enough if you need frequent ones. For large ones, back up at least once a day. This is enough for 99% of projects to find lost data or recover completely. These needs are fully covered by logical backups.

But if you're a bank or a managed DB developer, you really need the finest backup configuration for your tens and hundreds of terabytes of data. Here Postgresus will never outperform physical backups from WAL-G or pgBackRest in console convenience or in efficiency for databases measured in terabytes. But, in my opinion, these are specialized tools for such tasks, made by geniuses and maintainers of PostgreSQL itself.

In my opinion, incremental backups are really needed in two cases:

- In the past, when there wasn't such a choice of cloud managed databases and large projects required hosting everything yourself. Now the same banks, marketplaces and telecom have internal clouds with PITR out of the box.

- You are a managed cloud. But here the task is radically more complex than just making backups and recovering from them.

Considering all this, I made a deliberate decision to drop incremental backup development. Instead, I'm focusing on making logical backups as convenient, reliable and practical as possible for daily use by developers, DevOps, DBAs and companies.

9) Many small improvements

The points above are far from everything. 80% of the work usually isn't visible. Off the top of my head, here's a short list:

- Buffering and RAM optimization. Postgresus no longer tries to buffer everything pg_dump sends while waiting for S3 to catch up (downloading from the database is usually faster than uploading to the cloud). RAM usage is now limited to 32 MB per parallel backup.

- Various stability improvements and minor bug fixes.

- Support for SMTP without a username and password. I didn't even know that happens…

- Support for topics when sending notifications to Telegram groups.

- Documentation was added!

- PostgreSQL 12 support.

And much more.
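The buffering point in the list above is a classic backpressure problem: a fast reader (the dump) must wait when a slow writer (the upload) falls behind. Here is a minimal sketch using a bounded queue. It is an illustration only, not Postgresus's implementation; the chunk size, the function name and the threading approach are assumptions.

```python
import io
import queue
import threading

CHUNK_SIZE = 1024 * 1024  # 1 MiB per chunk (assumed)
MAX_CHUNKS = 32           # 32 queued chunks x 1 MiB = roughly 32 MB per backup


def pipe_with_backpressure(source, sink, chunk_size=CHUNK_SIZE, max_chunks=MAX_CHUNKS):
    """Copy source to sink while queueing at most max_chunks chunks."""
    buffer = queue.Queue(maxsize=max_chunks)

    def reader():
        while True:
            chunk = source.read(chunk_size)
            buffer.put(chunk)  # blocks while the queue is full
            if not chunk:      # empty bytes marks the end of the stream
                return

    thread = threading.Thread(target=reader, daemon=True)
    thread.start()

    copied = 0
    while True:
        chunk = buffer.get()
        if not chunk:
            break
        sink.write(chunk)      # the slow side, e.g. an upload
        copied += len(chunk)
    thread.join()
    return copied


# In-memory streams stand in for the dump output and the storage upload.
source = io.BytesIO(b"x" * 10_000)
sink = io.BytesIO()
copied = pipe_with_backpressure(source, sink, chunk_size=1024, max_chunks=4)
```

When the queue is full, the reader simply stops pulling data until the writer catches up, so memory use stays bounded no matter how large the database is.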

What didn't work out?

Of course, not everything works out and some things have to be dropped. I'm building Postgresus in my spare time, which barely exists. So I can't spread myself too thin or complicate UX with unnecessary features. Too many features is also bad.

1) Full database resource monitoring

I wanted to make Postgresus a PostgreSQL monitoring tool as well. Including system resources of the server running PostgreSQL:

- CPU

- RAM

- Disk space

- IO speed and load

I even built a base for collecting arbitrary metrics and a template for graphs:

Turns out PostgreSQL only exposes RAM usage and IO metrics out of the box.

Monitoring other resources requires extensions. But not every database can install the extensions I need, so I could only collect partial metrics. Then I discovered that cloud databases often don't allow installing extensions at all.

Then I realized metrics should be stored in VictoriaMetrics or at least TimescaleDB, not in Postgresus's own PostgreSQL, which would complicate the image build.

In the end, this non-essential feature would add:

- significant code complexity, meaning harder maintenance;

- more image build requirements (additional components);

- complicated UX (as I said, too many features is bad);

- ~~unclear positioning: are we a backup tool, a monitoring tool, or trying to do everything?~~

So I dropped resource monitoring and focused on making Postgresus the best backup tool it can be.

2) SQL console

I also wanted to add an SQL console. Since Postgresus is already connected to the DB, why not run queries directly from it? It would be convenient — no need to open PgAdmin, DataGrip or DBeaver every time.

I never found time to work on it, only planned. One contributor started on the feature and made a PR. But as expected with complex features, many requirements and edge cases came up:

- add SQL syntax support;

- add autocomplete and hints;

- add lazy loading so that a 100 MB result set isn't sent to the browser all at once;

- add at least a few export formats, such as CSV and XLSX.

That's basically a full project on its own, not just one tab.

We decided to drop the feature and save the effort. This turned out to be the right call, since we added read only roles that don't allow INSERT, UPDATE and DELETE anyway.

Conclusion

That's the journey Postgresus has made in six months. It grew from a niche project to an enterprise-level tool that helps both individual developers and entire DBA teams (I was surprised to learn this just ~2 months after the first release, by the way). I'm genuinely glad that thousands of projects and users rely on Postgresus.

The project isn't stopping here. My goal now is to make Postgresus the most convenient PostgreSQL backup tool in the world. There's a large backlog of features and improvements coming gradually.

My main priorities remain:

- project security — this is critical, without it everything else doesn't make sense;

- convenient UX in terms of using the project itself and no excess of features (we do one task, but very well);

- convenient UX in terms of deployment: so that everything deploys with one command and nothing needs to be configured out of the box;

- project openness — completely open source and free for any person or company.

If you like the project or find it useful — I'd appreciate a star on GitHub or sharing it with friends ❤️

\

You May Also Like

New Gold Protocol's NGP token was exploited and attacked, resulting in a loss of approximately $2 million.

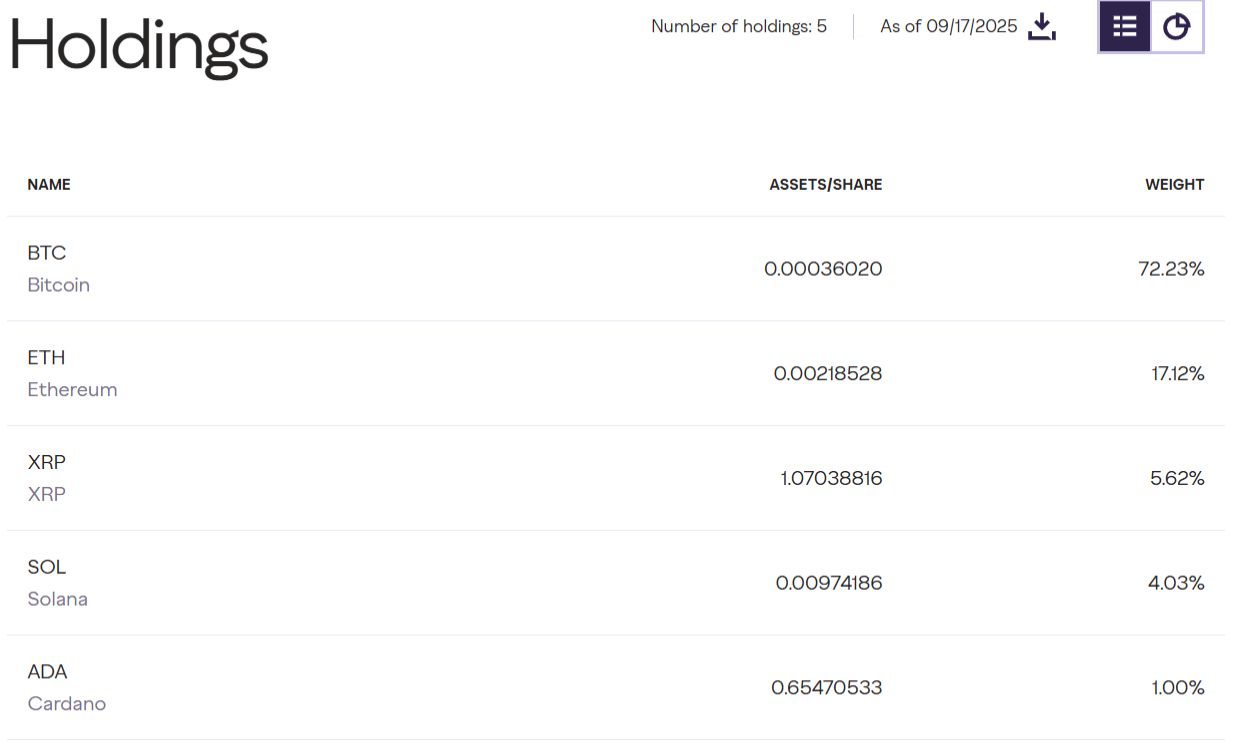

Grayscale’s Crypto Large Cap Fund, Including BTC, ETH, XRP, ADA, Gets SEC Approval